The Horseshoe Theory of Google Search

New generative-AI features are bringing the company back to basics.

Earlier today, Google presented a new vision for its flagship search engine, one that is uniquely tailored to the generative-AI moment. With advanced technology at its disposal, “Google will do the Googling for you,” Liz Reid, the company’s head of search, declared onstage at the company’s annual software conference.

Googling something rarely yields an immediate, definitive answer. You enter a query, confront a wall of blue links, open a zillion tabs, and wade through them to find the most relevant information. If that doesn’t work, you refine the search and start again. Now Google is rolling out “AI overviews” that might compile a map of “anniversary worthy” restaurants in Dallas sorted by ambiance (live music, rooftop patios, and the like), comb recipe websites to create meal plans, structure an introduction to an unfamiliar topic, and so on.

The various other generative-AI features shown today (code-writing tools, a new image-generating model, assistants for Google Workspace and Android phones) were buoyed by the usual claims about how AI will be able to automate or assist you with any task. But laced throughout the announcements seemed to be a veiled admission of generative AI’s shortcomings: The technology is great at synthesizing and recontextualizing information. It’s not the best at giving definitive answers. Perhaps as a result, the company seems to be hoping that generative AI can turn its search bar into a sort of educational aid: a tool that guides your inquiry rather than fully resolving it on its own.

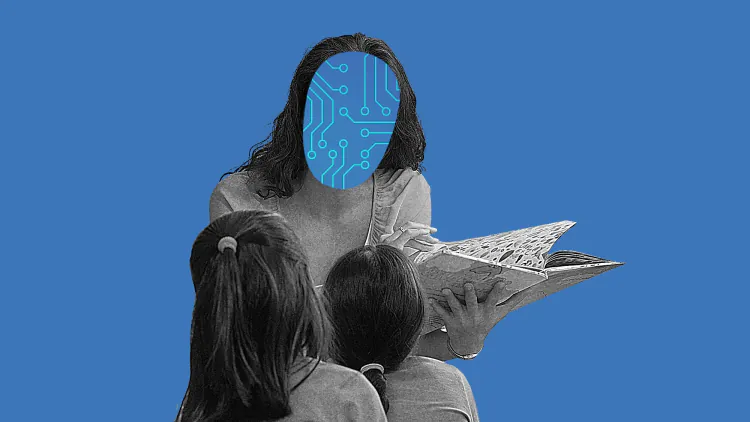

This mission was made explicit in the company’s introduction of LearnLM, a suite of AI models that will be integrated into Google Search, the stand-alone Gemini chatbot, and YouTube. You will soon be able to ask Gemini to make a “Simpler” search overview or “Break It Down” into digestible chunks, and to ask questions in the middle of academic YouTube videos such as recorded lectures. AI tools that can teach any subject, or explain any scientific paper, are also in the works. “Generative AI enables you to have an interactive experience with information that allows you to then imbibe it better,” Ben Gomes, the senior vice president of learning and the longtime head of search at Google, told me in an interview yesterday.

The obvious, immediate question that LearnLM, and Google’s entire suite of AI products, raises is: Why would anybody trust this technology to reliably plan their wedding anniversary, let alone teach their child? Generative AI is infamous for making things up and then authoritatively asserting them as truth. Google’s very first generative-AI demo involved such an error, sending the company’s stock cratering by 9 percent. Hallucinate, the term used when an AI model invents things, was Dictionary.com’s 2023 word of the year. Last month, the tech columnist Geoffrey Fowler pilloried Google’s AI-powered-search experiment as a product that “makes up facts, misinterprets questions, delivers out-of-date information and just generally blathers on.” Needless to say, an SAT tutor who occasionally hallucinates that the square root of 16 is five will not be an SAT tutor for long.

There are, in fairness, a plethora of techniques that Google and other companies use in an attempt to ground AI outputs in established facts. Google and Bing searches that use AI provide long lists of footnotes and links (although these host their own share of scams and unreliable sources). But the search giant’s announcements today, and my interview yesterday, suggest that the company is resolving these problems in part by reframing the role of AI altogether. As Gomes told me, generative AI can serve as a “learning companion,” a technology that can “stimulate curiosity” rather than deliver one final answer.

The LearnLM models, Gomes said, are being designed to point people to outside sources, so they can get “information from multiple perspectives” and “verify in multiple places that this is exactly what you want.” The LearnLM tools can simplify and help explain concepts in a dialogue, but they are not designed to be arbiters of truth. Rather, Gomes wants the AI to push people toward the educators and creators that already exist on the internet. “That’s the best way of building trust,” he said.

This strategy extends to the other AI features Google is bringing to search too. The AI overviews, Gomes told me, “rely heavily on pointing you back to web resources for you to be able to verify that the information is correct.” Google’s three unique advantages over competing products, Reid said at the conference, are its access to real-time information, advanced ranking algorithms, and Gemini. The majority of Google Search’s value, in other words, has nothing to do with generative AI; instead, it comes from the information online that Google can already pull up, and which a chatbot can simply translate into a digestible format. Again and again, the conference returned to Gemini’s access to the highest-quality real-time information. That’s not omniscience; it’s the ability to tap into Google’s preexisting index of the web.

That’s arguably what generative AI is best designed for. These algorithms are trained to find statistical patterns and predict words in a sentence, not discern fact from falsehood. That makes them potentially great at linking unrelated ideas, simplifying concepts, devising mnemonics, or pointing users to other content on the web. Every AI overview is “complete with a range of perspectives and links to dive deeper,” Reid said—that is, a better-formatted and more relevant version of the wall of blue links that Google has served for decades.

Generative AI, then, is in some ways providing a return to what Google Search was before the company infused it with product marketing and snippets and sidebars and Wikipedia extracts—all of which have arguably contributed to the degradation of the product. The AI-powered searches that Google executives described didn’t seem like going to an oracle so much as a more pleasant version of Google: pulling together the relevant tabs, pointing you to the most useful links, and perhaps even encouraging you to click on them.

Maybe Gemini can help sort through the keyword-stuffed junk that has afflicted the search engine. Certainly, that is the purpose of the educational AI that Gomes told me about. A chatbot, in this more humble form, will streamline but not upend the work of searching, and in turn learning.
