Silicon Valley’s ‘Audacity Crisis’

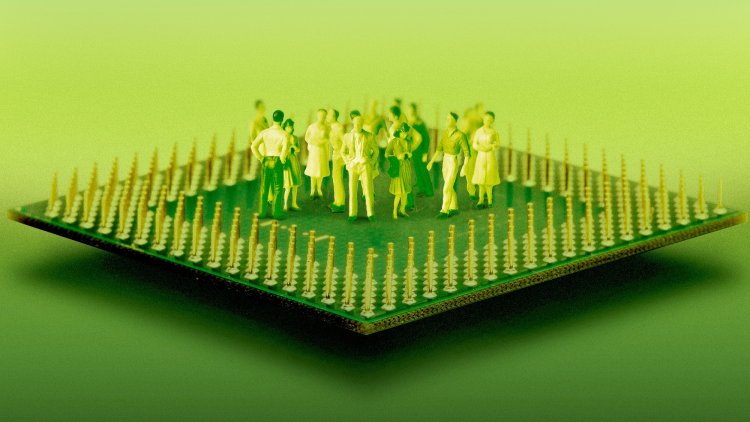

AI executives are acting like they own the world.

Two years ago, OpenAI released the public beta of DALL-E 2, an image-generation tool that immediately signified that we’d entered a new technological era. Trained on a huge body of data, DALL-E 2 produced unsettlingly good, delightful, and frequently unexpected outputs; my Twitter feed filled up with images derived from prompts such as “close-up photo of brushing teeth with toothbrush covered with nacho cheese.” Suddenly, it seemed as though machines could create just about anything in response to simple prompts.

You likely know the story from there: A few months later, ChatGPT arrived, millions of people started using it, the student essay was pronounced dead, Web3 entrepreneurs nearly broke their ankles scrambling to pivot their companies to AI, and the technology industry was consumed by hype. The generative-AI revolution began in earnest.

Where has it gotten us? Although enthusiasts eagerly use the technology to boost productivity and automate busywork, the drawbacks are also impossible to ignore. Social networks such as Facebook have been flooded with bizarre AI-generated slop images; search engines are floundering, trying to index an internet awash in hastily assembled, chatbot-written articles. Generative AI, we know for sure now, has been trained without permission on copyrighted media, which makes it all the more galling that the technology is competing against creative people for jobs and online attention; a backlash against AI companies scraping the internet for training data is in full swing.

Yet these companies, emboldened by the success of their products and war chests of investor capital, have brushed these problems aside and unapologetically embraced a manifest-destiny attitude toward their technologies. Some of these firms are, in no uncertain terms, trying to rewrite the rules of society by doing whatever they can to create a godlike superintelligence (also known as artificial general intelligence, or AGI). Others seem more interested in using generative AI to build tools that repurpose others’ creative work with little to no citation. In recent months, leaders within the AI industry have more brazenly expressed a paternalistic attitude about how the future will look, including who will win (those who embrace their technology) and who will be left behind (those who do not). They’re not asking us; they’re telling us. As the journalist Joss Fong commented recently, “There’s an audacity crisis happening in California.”

There are material concerns to contend with here. It is audacious to massively jeopardize your net-zero climate commitment in favor of advancing a technology that has told people to eat rocks, yet Google appears to have done just that, according to its latest environmental report. (In an emailed statement, a Google spokesperson, Corina Standiford, said that the company remains “dedicated to the sustainability goals we’ve set,” including reaching net-zero emissions by 2030. According to the report, its emissions grew 13 percent in 2023, in large part because of the energy demands of generative AI.) And it is certainly audacious for companies such as Perplexity to use third-party tools to harvest information while ignoring long-standing online protocols that prevent websites from being scraped and having their content stolen.

But I’ve found the rhetoric from AI leaders to be especially exasperating. This month, I spoke with OpenAI CEO Sam Altman and Thrive Global CEO Arianna Huffington after they announced their intention to build an AI health coach. The pair explicitly compared their nonexistent product to the New Deal. (They suggested that their product—so theoretical, they could not tell me whether it would be an app or not—could quickly become part of the health-care system’s critical infrastructure.) But this audacity is about more than just grandiose press releases. In an interview at Dartmouth College last month, OpenAI’s chief technology officer, Mira Murati, discussed AI’s effects on labor, saying that, as a result of generative AI, “some creative jobs maybe will go away, but maybe they shouldn’t have been there in the first place.” She added later that “strictly repetitive” jobs are also likely on the chopping block. Her candor appears emblematic of OpenAI’s very mission, which straightforwardly seeks to develop an intelligence capable of “turbocharging the global economy.” Jobs that can be replaced, her words suggested, aren’t just unworthy: They should never have existed. In the long arc of technological change, this may be true—human operators of elevators, traffic signals, and telephones eventually gave way to automation—but that doesn’t mean that catastrophic job loss across several industries simultaneously is economically or morally acceptable.

Along these lines, Altman has said that generative AI will “create entirely new jobs.” Other tech boosters have said the same. But if you listen closely, their language is cold and unsettling, offering insight into the kinds of labor that these people value and, by extension, the kinds that they don’t. Altman has spoken of AGI possibly replacing “the median human” worker’s labor, giving the impression that the least exceptional among us might be sacrificed in the name of progress.

Even some inside the industry have expressed alarm at those in charge of this technology’s future. Last month, Leopold Aschenbrenner, a former OpenAI employee, wrote a 165-page essay series warning readers about what’s being built in San Francisco. “Few have the faintest glimmer of what is about to hit them,” Aschenbrenner, who was reportedly fired this year for leaking company information, wrote. In Aschenbrenner’s reckoning, he and “perhaps a few hundred people, most of them in San Francisco and the AI labs,” have the “situational awareness” to anticipate the future, which will be marked by the arrival of AGI, geopolitical struggle, and radical cultural and economic change.

Aschenbrenner’s manifesto is a useful document in that it articulates how the architects of this technology see themselves: a small group of people bound together by their intellect, skill sets, and fate to help decide the shape of the future. Yet to read his treatise is to feel not FOMO, but alienation. The civilizational struggle he depicts bears little resemblance to the AI that the rest of us can see. “The fate of the world rests on these people,” he writes of the Silicon Valley cohort building AI systems. This is not a call to action or a proposal for input; it’s a statement of who is in charge.

Unlike me, Aschenbrenner believes that a superintelligence is coming, and coming soon. His treatise contains quite a bit of grand speculation about the potential for AI models to drastically improve from here. (Skeptics have strongly pushed back on this assessment.) But his primary concern is that too few people wield too much power. “I don’t think it can just be a small clique building this technology,” he told me recently when I asked why he wrote the treatise.

“I felt a sense of responsibility, by having ended up a part of this group, to tell people what they’re thinking,” he said, referring to the leaders at AI companies who believe they’re on the cusp of achieving AGI. “And again, they might be right or they might be wrong, but people deserve to hear it.” In our conversation, I found an unexpected overlap between us: Whether you believe that AI executives are delusional or genuinely on the verge of constructing a superintelligence, you should be concerned about how much power they’ve amassed.

Having a class of builders with deep ambitions is part of a healthy, progressive society. Great technologists are, by nature, imbued with an audacious spirit to push the bounds of what is possible—and that can be a very good thing for humanity indeed. None of this is to say that the technology is useless: AI undoubtedly has transformative potential (predicting how proteins fold is a genuine revelation, for example). But audacity can quickly turn into a liability when builders become untethered from reality, or when their hubris leads them to believe that it is their right to impose their values on the rest of us, in return for building God.

An industry is what it produces, and in 2024, these executive pronouncements and brazen actions, taken together, are the actual state of the artificial-intelligence industry two years into its latest revolution. The apocalyptic visions, the looming nature of superintelligence, and the struggle for the future of humanity—all of these narratives are not facts but hypotheticals, however exciting, scary, or plausible.

When you strip all of that away and focus on what’s really there and what’s really being said, the message is clear: These companies wish to be left alone to “scale in peace,” a phrase that SSI, a new AI company co-founded by Ilya Sutskever, formerly OpenAI’s chief scientist, used with no trace of self-awareness in announcing his company’s mission. (“SSI” stands for “safe superintelligence,” of course.) To do that, they’ll need to commandeer all creative resources—to eminent-domain the entire internet. The stakes demand it. We’re to trust that they will build these tools safely, implement them responsibly, and share the wealth of their creations. We’re to trust their values—about the labor that’s valuable and the creative pursuits that ought to exist—as they remake the world in their image. We’re to trust them because they are smart. We’re to trust them as they achieve global scale with a technology that they say will be among the most disruptive in all of human history. Because they have seen the future, and because history has delivered them to this societal hinge point, marrying ambition and talent with just enough raw computing power to create God. To deny them this right is reckless, but also futile.

It’s possible, then, that generative AI’s chief export is not image slop, voice clones, or lorem ipsum chatbot bullshit but instead unearned, entitled audacity. Yet another example of AI producing hallucinations—not in the machines, but in the people who build them.
