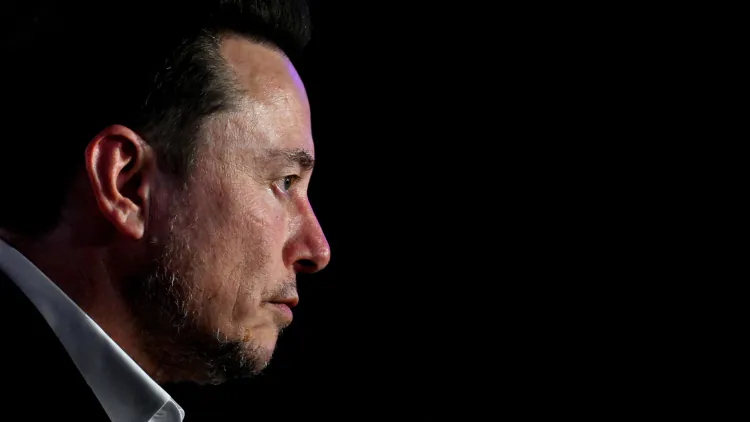

Elon Musk Just Added a Wrinkle to the AI Race

Transparency, or the appearance of it, is the technology’s new norm.

Yesterday afternoon, Elon Musk fired the latest shot in his feud with OpenAI: His new AI venture, xAI, now allows anyone to download and use the computer code for its flagship software. No fees, no restrictions, just Grok, a large language model that Musk has positioned against OpenAI’s GPT-4, the model powering the most advanced version of ChatGPT.

Sharing Grok’s code is a thinly veiled provocation. Musk was one of OpenAI’s original backers. He left in 2018 and recently sued for breach of contract, arguing that the start-up and its CEO, Sam Altman, have betrayed the organization’s founding principles in pursuit of profit, transforming a utopian vision of technology that “benefits all of humanity” into yet another opaque corporation. Musk has spent the past few weeks calling the secretive firm “ClosedAI.”

It’s a mediocre zinger at best, but he does have a point. OpenAI does not share much about its inner workings, it added a “capped-profit” subsidiary in 2019 that expanded the company’s remit beyond the public interest, and it’s valued at $80 billion or more. Meanwhile, more and more AI competitors are freely distributing their products’ code. Meta, Google, Amazon, Microsoft, and Apple, all companies with fortunes built on proprietary software and gadgets, have either released the code for various open AI models or partnered with start-ups that have done so. Such “open source” releases, in theory, allow academics, regulators, the public, and start-ups to download, test, and adapt AI models for their own purposes. Grok’s release, then, marks not only a flash point in a battle between companies but also, perhaps, a turning point across the industry. OpenAI’s commitment to secrecy is starting to seem like an anachronism.

This tension between secrecy and transparency has animated much of the debate around generative AI since ChatGPT arrived, in late 2022. If the technology does genuinely represent an existential threat to humanity, as some believe, is the risk increased or decreased depending on how many people can access the relevant code? Doomsday scenarios aside, if AI agents and assistants become as commonly used as Google Search or Siri, who should have the power to steer and scrutinize that transformation? Open-sourcing advocates, a group that now seemingly includes Musk, argue that the public should be able to look under the hood to rigorously test AI for both civilization-ending threats and the less fantastical biases and flaws plaguing the technology today. Better that than leaving all the decision making to Big Tech.

OpenAI, for its part, has provided a consistent explanation for why it began raising enormous amounts of money and stopped sharing its code: Building AI became incredibly expensive, and the prospect of unleashing its underlying programming became incredibly dangerous. The company has said that releasing full products, such as ChatGPT, or even just demos, such as one for the video-generating Sora program, is enough to ensure that future AI will be safer and more useful. And in response to Musk’s lawsuit, OpenAI published snippets of old emails suggesting that Musk explicitly agreed with these justifications, going so far as to suggest a merger with Tesla in early 2018 as a way to meet the technology’s future costs.

Those costs represent a different argument for open-sourcing: Publicly available code can enable competition by allowing smaller companies or independent developers to build AI products without having to engineer their own models from scratch, which can be prohibitively expensive for anyone but a few ultra-wealthy companies and billionaires. But both approaches (getting investments from tech companies, as OpenAI has done, or having tech companies open up their baseline AI models) are, in some sense, two sides of the same coin: ways to overcome the technology’s tremendous capital requirements that will not, on their own, redistribute that capital.

For the most part, when companies release AI code, they withhold certain crucial aspects; xAI has not shared Grok’s training data, for example. Without training data, it’s hard to investigate why an AI model exhibits certain biases or limitations, and it’s impossible to know if its creator violated copyright law. And without insight into a model’s production—technical details about how the final code came to be—it’s much harder to glean anything about the underlying science. Even with publicly available training data, AI systems are simply too massive and computationally demanding for most nonprofits and universities, let alone individuals, to download and run. (A standard laptop has too little storage to even download Grok.) xAI, Google, Amazon, and all the rest are not telling you how to build an industry-leading chatbot, much less giving you the resources to do so. Openness is as much about branding as it is about values. Indeed, in a recent earnings call, Mark Zuckerberg did not mince words about why openness is good business: It encourages researchers and developers to use, and improve, Meta products.

Numerous start-ups and academic collaborations are releasing open code, training data, and robust documentation alongside their AI products. But Big Tech companies tend to keep a tight lid. Meta’s flagship model, Llama 2, is free to download and use—but its policies forbid deploying it to improve another AI language model or to develop an application with more than 700 million monthly users. Such uses would, of course, represent actual competition with Meta. Google’s most advanced AI offerings are still proprietary; Microsoft has supported open-source projects, but OpenAI’s GPT-4 remains central to its offerings.

Regardless of the philosophical debate over safety, the fundamental reason for the closed approach of OpenAI, compared with the growing openness of the tech behemoths, might simply be its size. Trillion-dollar companies can afford to put AI code into the world, knowing that the profits lie in their other products and in integrating AI into them, such as bringing AI to Gmail or Microsoft Outlook. xAI has the direct backing of one of the richest people in the world, and its software could be worked into X (formerly Twitter) features and Tesla cars. Other start-ups, meanwhile, have to keep their competitive advantage under wraps. Only when openness and profit come into conflict will we get a glimpse of these companies’ true motivations.